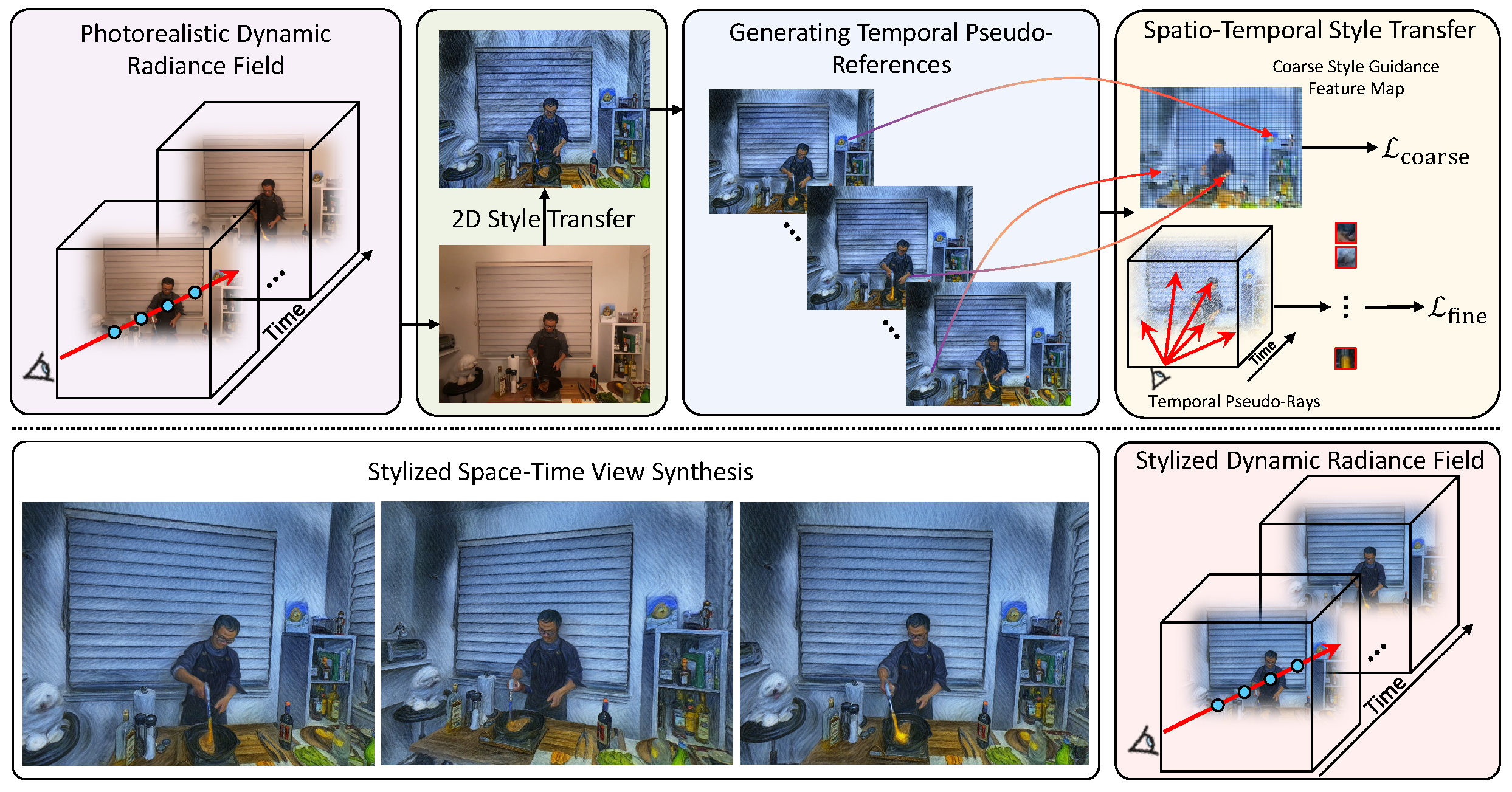

Method

Given a pre-trained photorealistic dynamic radiance field, we first render a reference view at time k from a chosen reference camera. This reference view is then stylized with a suitable 2D style transfer method (e.g., manual editing, NNST, or ControlNet) to produce a stylized reference image. To propagate the style from the stylized reference to other timestamps, we generate temporal pseudo-references and apply spatio-temporal style transfer to optimize the dynamic radiance field. Once optimization is complete, the stylized field produces plausible space-time view synthesis results for dynamic 3D scenes.
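The order of operations in this pipeline can be illustrated with a toy sketch. It is not the actual implementation: the "radiance field" is reduced to one small image per timestamp, the 2D stylizer is a simple color inversion, and the pseudo-references are built by adding the reference frame's style residual to every frame. The names `render`, `make_pseudo_references`, `stylize_field`, and `invert` are all hypothetical, and the squared-error objective is an assumed stand-in for the spatio-temporal style transfer losses.

```python
from typing import Dict, List

Image = List[float]      # toy "image": flat list of pixel intensities in [0, 1]
Field = Dict[int, Image]  # toy "dynamic radiance field": one image per timestamp


def render(field: Field, t: int) -> Image:
    """Toy renderer (assumption): look up and copy the frame stored for time t."""
    return list(field[t])


def invert(img: Image) -> Image:
    """Stand-in for a 2D style transfer method (assumption): invert intensities."""
    return [1.0 - p for p in img]


def make_pseudo_references(field: Field, stylized_ref: Image, k: int) -> Field:
    """Hypothetical propagation: add the style residual at time k to every frame."""
    ref = render(field, k)
    residual = [s - r for s, r in zip(stylized_ref, ref)]
    return {
        t: [min(1.0, max(0.0, p + d)) for p, d in zip(render(field, t), residual)]
        for t in field
    }


def stylize_field(field: Field, pseudo_refs: Field,
                  steps: int = 200, lr: float = 0.1) -> Field:
    """Gradient descent on a per-pixel squared error against the pseudo-references."""
    out = {t: list(img) for t, img in field.items()}
    for _ in range(steps):
        for t, target in pseudo_refs.items():
            # Gradient of (p - q)^2 with respect to p is 2 * (p - q).
            out[t] = [p - lr * 2.0 * (p - q) for p, q in zip(out[t], target)]
    return out


# 1) Start from a pre-trained (here: hard-coded) photorealistic field.
field: Field = {0: [0.1, 0.2], 1: [0.2, 0.3], 2: [0.3, 0.4]}
k = 1

# 2) Render the reference view at time k and stylize it in 2D.
stylized_ref = invert(render(field, k))

# 3) Build temporal pseudo-references for all timestamps.
pseudo_refs = make_pseudo_references(field, stylized_ref, k)

# 4) Optimize the field so its renders match the pseudo-references.
stylized_field = stylize_field(field, pseudo_refs)
```

In this toy setting the optimized frame at time k reproduces the stylized reference, and the other timestamps inherit the same residual. A real system would replace each stand-in with a learned renderer, an actual 2D stylizer, and the method's own pseudo-reference and loss construction.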